Program (June 18, 2018, Room 355 - C)

| 8:45 | Introduction (Andreas Geiger, Oliver Zendel) |

| 9:00 | Invited Talk (chair: Oliver Zendel) |

| - Judy Hoffman (UC Berkeley): Making our Models Robust to Changing Visual Environments | |

| 9:45 | Session 1: Stereo (chair: Daniel Scharstein) |

| - Yi Zhu (UCM): Stereo Matching Using Multi-scale Feature Constancy | |

| - Eddy Ilg (Uni Freiburg): Occlusions, Motion and Depth Boundaries with a Generic Network for Optical Flow, Disparity, or Scene Flow | |

| 10:30 | Coffee Break |

| 11:00 | Session 2: Multiview Stereo (Daniel Scharstein, Johannes Schönberger) |

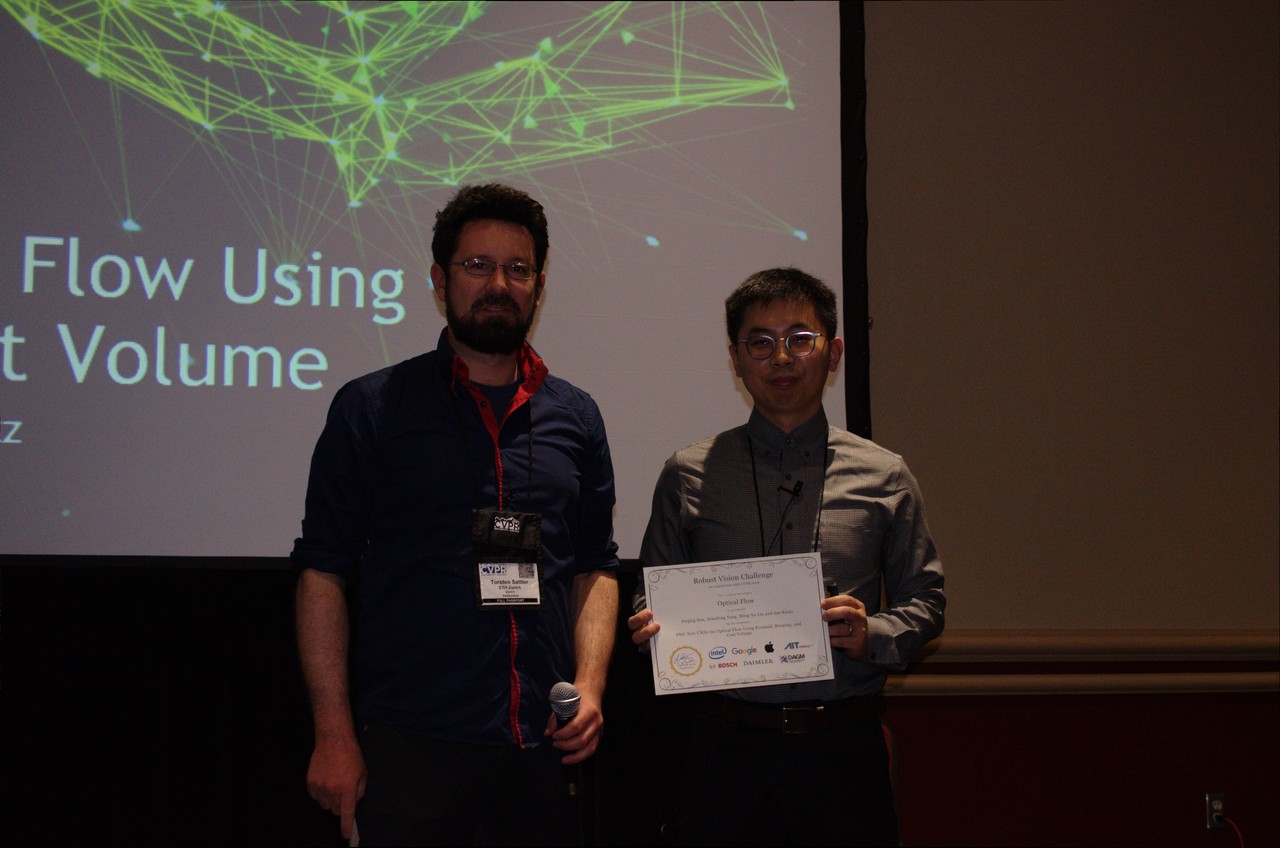

| 11:30 | Session 3: Optical Flow (chair: Torsten Sattler) |

| - Deqing Sun (NVIDIA): PWC-Net: CNNs for Optical Flow Using Pyramid, Warping, and Cost Volume | |

| - Daniel Maurer (Uni Stuttgart): ProFlow: Learning to Predict Optical Flow | |

| 12:15 | Lunch Break |

| 13:30 | Invited Talk (chair: Carsten Rother) |

| - Uwe Franke (Daimler AG): 30 Years Fighting for Robustness | |

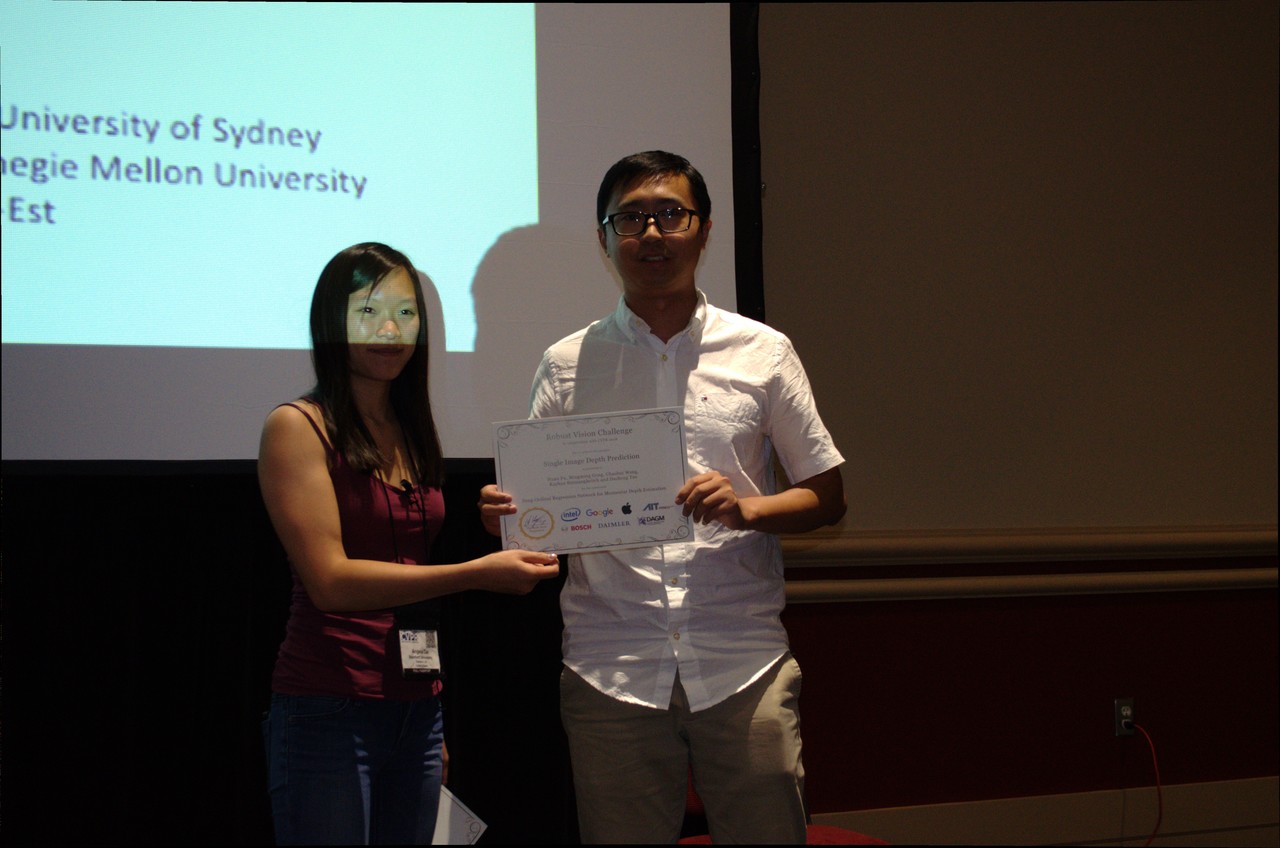

| 14:15 | Session 4: Single Image Depth Prediction (chair: Angela Dai) |

| - Mingming Gong (CMU): Deep Ordinal Regression Network for Monocular Depth Estimation | |

| - Ruibo Li (HUST): Deep Attention-based Classification Network for Robust Depth Prediction | |

| 15:00 | Session 5: Semantic Segmentation (chair: Matthias Niessner) |

| - Peter Samuel Rota Bulo (Mapillary): In-Place Activated BatchNorm for Memory-Optimized Training of DNNs | |

| - Marin Oršić (Uni Zagreb): Ladder-DenseNet Architecture for Robust Semantic Segmentation | |

| 15:45 | Coffee Break |

| 16:00 | Invited Talk (chair: Matthias Niessner) |

| - Stefan Roth (TU Darmstadt): Robust Scene Analysis: Energy-based models, deep learning, and something in between | |

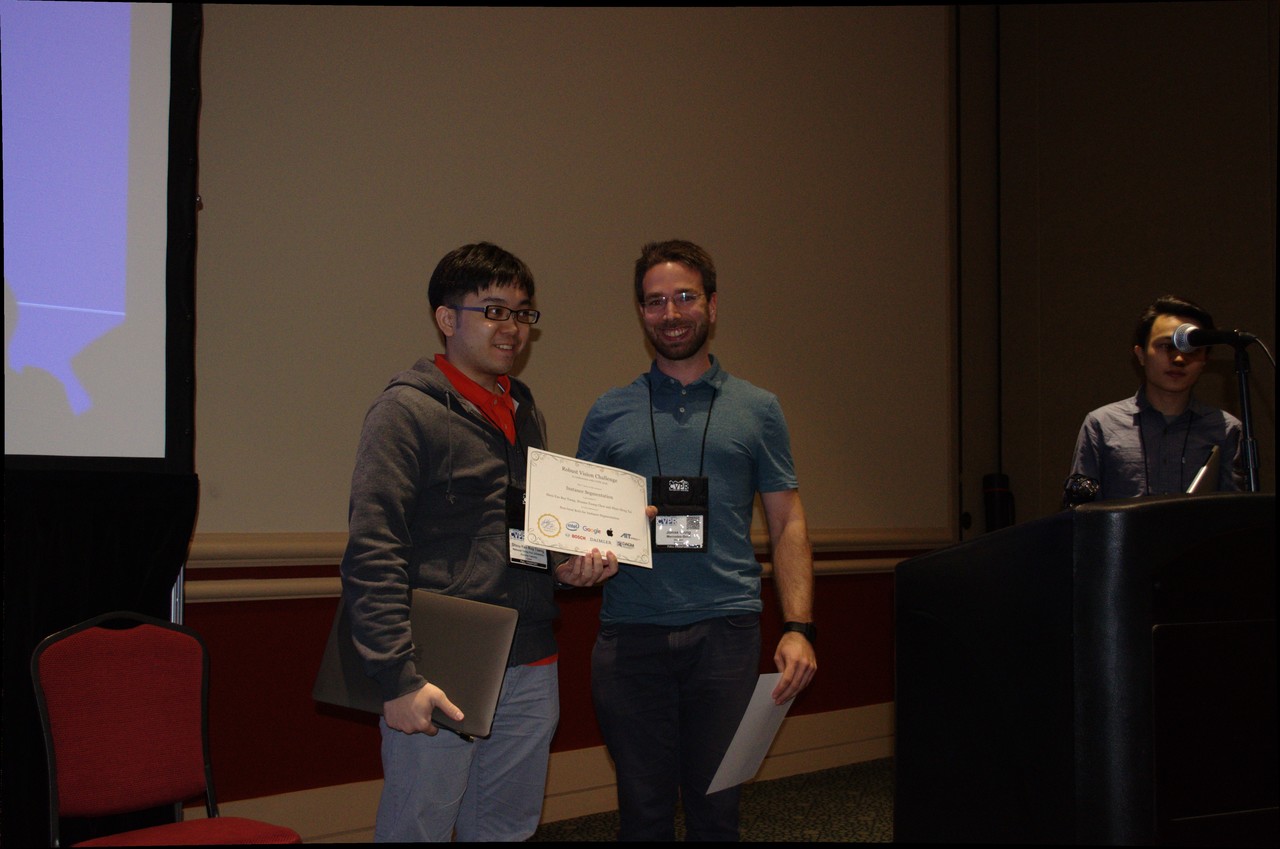

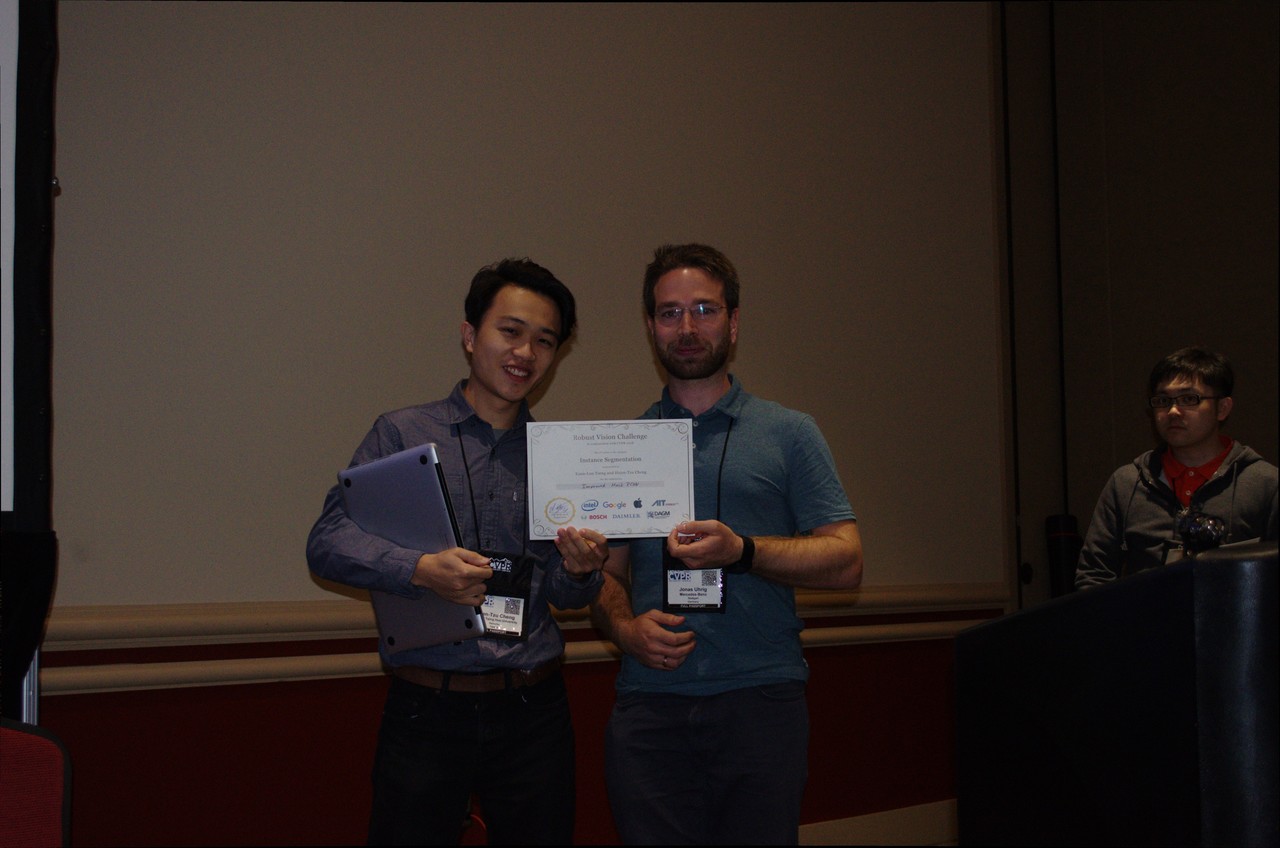

| 16:45 | Session 6: Instance Segmentation (chair: Jonas Uhrig) |

| - Shou-Yao Roy Tseng (NTHU): Non-local RoI for Instance Segmentation | |

| - Hsien-Tzu Cheng (NTHU): Improved MaskRCNN | |

| 17:30 | Discussion and Closing (Andreas Geiger) |

| 18:30 | Dinner (Winners, Sponsors, Organizers) |

Invited Speakers

Judy Hoffman is a postdoctoral researcher at UC Berkeley. Her research lies at the intersection of computer vision and machine learning, with a specific focus on semi-supervised learning algorithms for domain adaptation and transfer learning. She received a PhD in Electrical Engineering and Computer Science from UC Berkeley in 2016. She is a recipient of the NSF Graduate Research Fellowship, the Rosalie M. Stern Fellowship, and the Arthur M. Hopkin Award. She is also a founder of the WiCV (Women in Computer Vision) workshop, which is co-located with CVPR annually.

Uwe Franke received his Diploma degree and his PhD degree, both in electrical communications engineering, from Aachen Technical University in 1983 and 1988, respectively. He has been with Daimler Research and Development since 1989. He developed Daimler's lane departure warning system (Spurassistent) and has been working on stereo vision since 1996. Since 2000 he has been head of Daimler's image understanding group. The algorithms developed by this group form the basis of Daimler's stereo-camera-based safety systems, which have been commercially available in mid- and upper-class Mercedes-Benz vehicles since 2013.

Stefan Roth received the Diplom degree in Computer Science and Engineering from the University of Mannheim, Germany, in 2001. He received the ScM degree in Computer Science from Brown University in 2003 and the PhD degree in Computer Science from the same institution in 2007. Since 2007 he has been on the faculty of Computer Science at Technische Universität Darmstadt, Germany (Juniorprofessor 2007-2013, Professor since 2013). His research interests include probabilistic and deep learning approaches to image modeling, motion estimation and tracking, object recognition, and scene understanding. He has received several awards, including honorable mentions for the Marr Prize at ICCV 2005 (with M. Black) and ICCV 2013 (with C. Vogel and K. Schindler), the 2010 Olympus Prize of the German Association for Pattern Recognition (DAGM), and the 2012 Heinz Maier-Leibnitz Prize of the German Research Foundation (DFG). In 2013, he was awarded a Starting Grant by the European Research Council (ERC).